Experts say Facebook's new ad policy won't do much but hurt small political campaigns

The change will preclude advertisers from targeting users based on race, ethnicity, religion, or political affiliation. Experts are already skeptical of the new policy.

Facebook announced a major change to its advertising interface on Nov. 9, barring firms from targeting users based on their race, ethnicity, religion, sexual orientation, or political affiliation.

Experts say the change appears to be a somewhat cosmetic response to the company's recently battered public image, and that big firms will easily be able to circumvent the prohibition. But the shift could have a more profound effect on campaigns that lack a data team, which may not be able to navigate the new restrictions as effectively.

“It almost certainly is going to have the impact of being another barrier to entry for a lot of campaigns,” Jacob Neiheisel, a professor of political science at the University at Buffalo, told the American Independent Foundation.

“If you’re an under-resourced campaign to begin with, maybe you’re fighting it out for a primary slot, you don’t have a lot of institutional backing, it’s just going to be more costly to do what used to be a pretty simple thing,” he added. “That could price some people out.”

A campaign that can find someone with a master’s degree in statistics or behavioral science, for example, should have little trouble creating surveys that ask for demographic information and then building a behavioral model around the answers, Neiheisel explained.

Such analysts could potentially figure out what respondents like or where they’re located, along with the demographic information Facebook is blocking, and build a model that targets users based on subcategories connected to a particular group of people. For example, analysts could target anyone who has expressed interest in modern art if they find it’s popular with one of the demographic groups that can’t be targeted under Facebook’s restrictions.

“If you’ve got somebody reasonable on your staff who knows anything about behavioral modeling, you can get around this fairly roundly,” Neiheisel said. “Again, that’s going to limit [this] to better resourced types of campaigns. Certainly national campaigns will have this kind of expertise.”

Well-funded campaigns also have other tools at their disposal that underfunded ones lack. Firms with big budgets can tap into a vast market in which brokers and trusts sell data that small campaigns simply can’t afford. The Republican Party, for instance, maintains a massive data inventory on more than 300 million people.

Advertisers can still upload their own custom lists of users to target. If they’ve purchased voter data that provides information on users’ propensity for violence, for example, or likeliness to respond to fear or provocation, they can use it in their ad targeting. Currently, Facebook has no way of knowing how firms created their user lists, so it’s entirely possible they might rely on some of the demographic categories banned earlier in November.

The change in ad targeting was made, according to Facebook, to improve user privacy and combat discrimination, and “to better match people’s evolving expectations of how advertisers may reach them on our platform.” Graham Mudd, Facebook’s vice president of product marketing, wrote in a blog post that the change was also intended to “address feedback from civil rights experts, policymakers and other stakeholders on the importance of preventing advertisers from abusing the targeting options we make available.”

Privacy advocates were immediately skeptical: Beyond the unintended byproduct of choking out smaller campaigns, they said, Facebook’s advertising operations remain far too opaque.

“What we need is to really ensure that there’s regulation of these influence firms and advertising campaigns,” said Emma Briant, a visiting research associate in human rights at Bard College. “And I don’t think that taking a few of the top targeted advertising tools that Facebook has available down is going to be the answer to that. The whole thing really has to go. But especially the ability to just upload lists of anyone.”

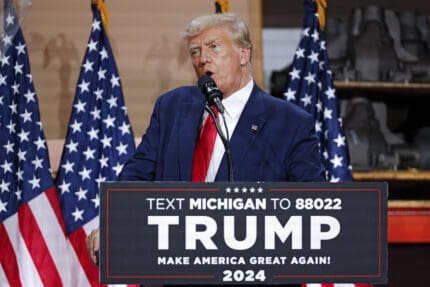

Briant was one of the key researchers who helped expose Cambridge Analytica, a data firm that worked with both former President Donald Trump’s 2016 campaign and pro-Brexit groups and that famously harvested data from 50 million Facebook users. Cambridge Analytica used that information to create psychological profiles of voters and then target them with ads based on their proclivities in such categories as militarism and violent occultism, pulling from data acquired through seemingly benign personality quizzes, among other things.

Facebook banned Cambridge Analytica from its platform in 2018, and the Federal Trade Commission later fined the social media giant $5 billion over privacy violations, but concerns remain about the platform’s handling of user data for advertising.

How those concerns will shake out in a digital political landscape defined by microtargeting remains to be seen.

“[Microtargeting] enables advertisers to essentially whisper in your ear with the things that mean the most to you,” Briant said. “So it enables them to find the minutiae of who is most likely to respond to their particular narratives that they want to push forward, and to be able to do that in a way where they can learn a lot more about you and be able to shape the dialogue around your concerns, your fears.”

In other words, advertisers have significant motivation to get around the new ad policy.

For the less-resourced campaigns left behind by the new rules, there’s still hope. Neiheisel said political advertisers are constantly innovating and looking for the next best way to reach voters.

Still, frustration with Facebook has only grown of late, fueling skepticism of even seemingly benign changes like the new ad-targeting policy.

In September, the Wall Street Journal began publishing “The Facebook Files,” a series based on internal documents provided by a whistleblower, former Facebook product manager Frances Haugen, that reportedly exposed the company’s efforts to conceal harms caused by its platforms and policies.

Among other things, the series revealed the company’s lackluster response to internal reports of human trafficking on its sites; how Facebook had allowed plagiarized and “stolen” content to “flourish”; its alleged exemptions for “elite” profiles from rules against violent or fake content; and the ways in which Instagram, which Facebook owns, had negatively impacted young women and girls.

In response to the leak, Facebook CEO Mark Zuckerberg claimed in an earnings call that the reports were “a coordinated effort to selectively use leaked documents to paint a false picture of our company.”

Facebook has long been criticized for its lack of transparency around its policies in general, especially regarding hate speech moderation and its use of user data.

“It’s hard to take these kinds of policy changes from them as anything other than PR, when, in fact, there is this high level of opaqueness, there is this track record of making decisions that are not in the public interest, that are not in the interest of the safety of their users, and a noninterest in creating a more inclusive platform,” Daniel Kelley, the associate director of the Anti-Defamation League’s Center for Technology and Society, told the American Independent Foundation.

In fact, Facebook has changed its policies around microtargeting and political advertising multiple times over the past five years, with little clarity around the actual changes or enforcement.

In 2017, ProPublica reported that advertisers could target users who showed interest in topics like “Jew hater” and “how to burn Jews.” Advertisers were also able to exclude users in racial, ethnic, or age categories from viewing certain ads.

Following those investigations, and accusations that excluding users amounted to discrimination, Facebook said it would change that policy. But a 2020 investigation by the Markup found ads that were still discriminating against users by age.

“The public, government, civil society have all lost trust in them, so it’s hard to be able to say that any policy change at this point will provide meaningful impact, because we just don’t know,” Kelley said.

He added, “It’s due to their inability to be transparent, their lack of sharing data with researchers, and the torrent of the Facebook Files, and everything over the last several months has just reinforced all of the most cynical takes you can imagine about Facebook and what drives them.”

Published with permission of The American Independent Foundation.